A Python to Rust Journey: Part 1 – Optimizing Python XML Parsing

Thus my journey into Rust begins... promises of "Significant" performance to be discovered!

DEV DIARY

Allen Frasier

5/13/2024 · 5 min read

The Challenge

Last autumn, I embraced a challenge posed by Python and Rust enthusiasts at a local data science and engineering meetup. The task? Elevate the performance of an XML parser I had crafted in Python back in early 2023. I use the parser for exploratory data analysis of financial data feeds that are delivered as XML.

I was happy with the performance in testing scenarios using small XML files of 10-25MB, but once file sizes passed 500MB and approached 2GB (the maximum possible), processing became extremely slow and consumed an enormous amount of server resources (CPU and memory). I had begun to scale the parsing horizontally, but the complexity was nagging at me, and my Rustacean friends insisted that I could get better performance by moving to Rust and optimizing the software before going crazy with hardware.

So after a month of adventures in distributing work packages with Docker, plus a nibble at Kubernetes, all of which turned into frustrating exercises in data reconstruction and running Docker with sudo, I decided to take up the Rust challenge.

The TLDR:

Start performance: 180 xml nests/sec

End performance: 17,252 xml nests/sec

While I loved the idea of staying in a single language code-base for the entire project, sometimes you just have to let it go and use the best language that serves the task at hand.

If you have a processing performance problem with Python, consider refactoring your code into a compiled language (wink, wink, Rust) before going horizontal and creating a mega complexity headache. You might get the performance you want without the data integrity complexity that horizontal scaling brings.

Rust is an amazing language and I’m glad I went through this process – and look forward to getting better with it in the future.

Python is still my go-to workhorse for MVPs and exploratory data analysis. With Apache Airflow, it's just too easy to make incredible data pipelines.

The Python Parser

Before discussing Rust, a little background on what I did with Python. My initial parser used Python's xml.dom.minidom to load the entire XML document into memory before parsing the data and converting it into useful pandas dataframes. It was modular, built from a few modules that provided a handful of classes modelling the XML data nests. Nothing out of the ordinary and, in my experience, a fairly run-of-the-mill Python XML parsing design pattern. However, performance degraded as the files got bigger.
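To make the load-everything-first pattern concrete, here is a minimal sketch using only the standard library. The tag names (`feed`, `nest`, `symbol`, `price`) and the helper functions are hypothetical stand-ins, since the real feed layout is not shown in this post, and the result is a plain list of dicts rather than a dataframe.

```python
from xml.dom import minidom

# Hypothetical sample feed; the real tag names are not given in this post.
SAMPLE_XML = """<feed>
  <nest><symbol>ABC</symbol><price>10.5</price></nest>
  <nest><symbol>XYZ</symbol><price>20.25</price></nest>
</feed>"""


def _text(parent, tag):
    """Return the text of the first <tag> element found under parent."""
    node = parent.getElementsByTagName(tag)[0]
    return node.firstChild.data


def parse_with_minidom(xml_text):
    """Build the full DOM in memory, then walk it to collect one dict per nest."""
    dom = minidom.parseString(xml_text)  # the whole document is loaded here
    rows = []
    for nest in dom.getElementsByTagName("nest"):
        rows.append({
            "symbol": _text(nest, "symbol"),
            "price": float(_text(nest, "price")),
        })
    return rows


rows = parse_with_minidom(SAMPLE_XML)
```

A list of dicts like `rows` can be handed to `pandas.DataFrame(rows)`. The point to notice is that nothing is extracted until the complete tree exists in memory, which is why this style struggles as files grow.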

My metric was the number of XML data nests processed per second. (Each nest contains a consistent set of packaged financial data.) The initial parser processed roughly 180 nests per second as a baseline with files of ~500MB. Larger files were a challenge for my test server (8-core AMD Ryzen 7 5700U with 32GB RAM and a 2TB SSD) to handle at all. Yes, I could have thrown a more powerful machine at the problem, but because the Python parser ran on a single thread, the gains would be limited to CPUs with better single-threaded speed and IPC, while most of the cores sat idle.
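A nests-per-second figure like this can be collected with a small timing wrapper. The sketch below is an assumption about how one might measure it, not the harness used for the numbers in this post; `nests_per_second` and `fake_parser` are hypothetical names, and the parser is assumed to be any callable that yields one item per nest.

```python
import time


def nests_per_second(parse_fn, source, clock=time.perf_counter):
    """Run parse_fn(source) once and return (nest_count, nests_per_sec)."""
    start = clock()
    count = sum(1 for _ in parse_fn(source))  # consume every parsed nest
    elapsed = clock() - start
    rate = count / elapsed if elapsed > 0 else float("inf")
    return count, rate


def fake_parser(n):
    """Stand-in for a real parser: yields n dummy nests."""
    for i in range(n):
        yield {"id": i}


count, rate = nests_per_second(fake_parser, 1000)
print(f"{count} nests at {rate:,.0f} nests/sec")
```

Using `time.perf_counter` rather than `time.time` gives a monotonic, high-resolution clock, and passing the clock in as a parameter keeps the function easy to test.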

Upgrading Python! TLDR: ~9.5% improvement to ~197 nests/sec.

Within the community, it is well known that moving to Python 3.11 brings some definite speed improvements... so why not? The original parser was written and run in a virtual environment with Python 3.8.5. I created a new virtual environment with Python 3.11.5. With just a few minor tweaks to the code base to accommodate the jump in Python version, I got to about 197 nests per second. Not bad: basically a free 9.5% increase in performance.

Switching to Streaming Parsing - TLDR: another ~17% improvement to 230 nests/sec.

With the easy Python uplift out of the way, I began to look at the code in more detail and became convinced that streaming parsing was the way to go for gargantuan XML. Instead of loading an entire XML document into memory, a streaming parser nibbles its way through the file one element at a time. Kudos to this Stack Overflow thread: https://stackoverflow.com/questions/7171140/using-python-iterparse-for-large-xml-files?noredirect=1&lq=1

Not desiring to write my own streaming parser, I installed the Python lxml module. lxml is built on the C libraries libxml2 and libxslt and promised much better performance. Well, it did not disappoint. After a week of learning, experimenting and code refactoring, I managed to move up to ~230 nests/sec. This is about a 17% improvement over the Python 3.11 result, which is not bad at all, and the most striking thing was the reduced CPU and memory burden on the test server. One thing to note regarding lxml: it has an ability to multithread that I did not use. I don't know how much more performance I could have gotten, but it was not in the same league as the "significant" improvements people in the Rust and Elixir communities were advertising, so I passed on it.
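The streaming approach described above can be sketched with `iterparse`. This is an illustration under assumptions, not the parser from this post: the tag names and `stream_nests` are hypothetical, and the import falls back to the standard library's `xml.etree.ElementTree` so the sketch still runs where lxml is not installed. Only calls that exist in both libraries are used.

```python
import io

try:
    from lxml import etree  # third-party; the library used in this post
except ImportError:  # fall back so the sketch still runs without lxml
    import xml.etree.ElementTree as etree

# Hypothetical sample feed; the real tag names are not given in this post.
SAMPLE_XML = b"""<feed>
  <nest><symbol>ABC</symbol><price>10.5</price></nest>
  <nest><symbol>XYZ</symbol><price>20.25</price></nest>
</feed>"""


def stream_nests(source, tag="nest"):
    """Yield one dict per <nest> as soon as its closing tag has been read."""
    for _event, elem in etree.iterparse(source, events=("end",)):
        if elem.tag != tag:
            continue
        yield {
            "symbol": elem.findtext("symbol"),
            "price": float(elem.findtext("price")),
        }
        elem.clear()  # release this nest's children once they have been read


rows = list(stream_nests(io.BytesIO(SAMPLE_XML)))
```

Each nest is handled when its `end` event fires and is then cleared, so the parser never needs to hold the full tree of child data at once. The Stack Overflow thread linked above discusses more aggressive cleanup of already-processed elements, which is left out here for brevity.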

Code Refactoring - TLDR: another ~12% improvement to 258 nests/sec.

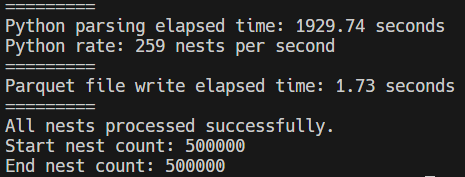

With some further code optimizations (better data structure use, condensed functions, for loop optimizations and unnecessary checks moved to unit testing) I managed to eke out just a bit more performance but pretty much hit a wall at 258 nests/sec. I was able to get faster speed on better hardware but that was not the solution I was looking for. As noted, I was looking for a software optimization and did not want to just throw better hardware at it.

Conclusion and Segue to Part 2

Going from 180 nests/sec to 258 nests/sec was not bad at all. That represents a roughly 43% improvement within the Python code base alone. BUT... my Rust and Elixir friends were goading me with promises of much better performance in their respective languages.
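For anyone who wants to check the arithmetic, this short sketch recomputes the step-by-step and overall gains from the throughput figures quoted in this post. The stage labels and the `pct_gain` helper are my own shorthand.

```python
# Throughput figures quoted in this post, in nests per second.
stages = [
    ("Python 3.8.5 + minidom (baseline)", 180),
    ("Python 3.11.5", 197),
    ("lxml streaming", 230),
    ("code refactoring", 258),
]


def pct_gain(before, after):
    """Percentage improvement going from before to after."""
    return (after - before) / before * 100


# Gain of each stage relative to the stage before it.
step_gains = [
    (name, round(pct_gain(prev, rate), 1))
    for (_, prev), (name, rate) in zip(stages, stages[1:])
]
# Overall gain from the baseline to the final Python version.
total_gain = round(pct_gain(stages[0][1], stages[-1][1]), 1)

for name, gain in step_gains:
    print(f"{name}: +{gain}%")
print(f"total: +{total_gain}%")
```

Note that the per-step percentages do not simply add up to the total, because each step is measured against the previous result rather than the original baseline.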

I knew I could increase throughput by going horizontal: basically splitting the XML files into chunks and sending them to separate servers for "parallel" processing. I did start down this road but quickly ran into the buzzsaw of Docker and Kubernetes DevOps. This alone could be another blog post! I have nothing bad to say about either tool, but for my specific use case they were not the right tools.

At this stage, I was ready to take the dive into another language. My own experience searching large text files with C last year was also pushing me toward a compiled language: I had watched C rip through some large 50MB plain-text files, as part of a search function, in a few seconds. After some initial browsing of the documentation, I decided that Rust's quick-xml streaming parser was a better bet than what I saw in Elixir land, so onto the Rusty road I stepped. (To be fair, I've been wanting to learn Rust anyway.)

In Part 2, I’ll expand on my experience with Rust...
